Autonomous Driving & Artificial Intelligence Lab

The Autonomous Driving & Artificial Intelligence Research team develops safer, innovative and competitive solutions enabling the future of smart mobility. Our team is dedicated to researching novel technologies for autonomous vehicles. Members have developed technologies using artificial intelligence, machine learning and computer vision for automotive applications. The team has worked on several projects focused on finding end-to-end solutions to autonomous driving-related problems, including the development of both hardware and software. The team is highly interdisciplinary, including students from backgrounds such as electrical engineering, computer engineering, computer science and mechanical engineering. The team is led by Dr. Jungme Park, who brings extensive experience working on autonomous vehicle technology in both academia and industry.

A variety of projects such as sensor-fused object detection, driver drowsiness detection and engine misfire detection are underway. The team specializes in fusing data from sensors such as cameras, radars and lidars for object detection. This has given us the know-how to collect and analyze large volumes of on-road sensor data. Members of the team also contribute to the university’s SAE AutoDrive Challenge team. Working on such advanced engineering ensures that students in this lab are well equipped to solve the mobility problems of the future.

PROJECTS

- Cooperative AI Inference in Vehicular Edge Networks for ADAS (NSF Award CNS–2128346)

- Traffic Light Detection System

- Camera Based Stop Sign & Stop Line Detection

- RADAR – Camera Sensor Fusion

- LiDAR – Camera based Object Detection

- Driver Monitoring System

- Application of Artificial Intelligence to Engine Systems

- Embedded Systems for Autonomous Driving

PUBLICATIONS

- Jungme Park, Bharath Kumar Thota, and K. Somashekar, “Sensor-Fused Nighttime System for Enhanced Pedestrian Detection in ADAS and Autonomous Vehicles,” Sensors 2024, 24, 4755. https://doi.org/10.3390/s24144755.

- B. Thota, K. Somashekar, and J. Park, "Sensor-Fused Low Light Pedestrian Detection System with Transfer Learning," SAE Technical Paper 2024-01-2043, 2024, https://doi.org/10.4271/2024-01-2043.

- Jungme Park, Mohammad Hasan Amin, Haswanth Babu Konjeti, Yunsheng Wang, “Integration of C-V2X with an Obstacle Detection System for ADAS Applications,” IEEE Communication, Networks, and Satellite (COMNETSAT) 2024.

- Sai Rithvick Mandumula, Jungme Park, Ritwik Prasad Asolkar and Karthik Somashekar, “Multi Sensor Object Detection System for Real-time Inferencing in ADAS,” IEEE Symposium Series on Computational Intelligence (SSCI 2023).

- Jungme Park, Pawan Aryal, Sai Rithvick Mandumula, and Ritwik Prasad Asolkar, “An Optimized DNN Model for Real-Time Inferencing on an Embedded Device,” Sensors 23, no. 8: 3992, 2023. https://doi.org/10.3390/s23083992

- Jungme Park, Wenchang Yu, Pawan Aryal, and Viktor Ciroski, “Chapter 5: Comparative Study on Transfer Learning for Object Detection and Classification Systems,” in a book entitled “AI-enabled Technologies for Autonomous and Connected Vehicles”, Springer, 2022.

- Jungme Park and Wenchang Yu, “A Sensor Fused Rear-Cross Traffic Detection System using Transfer Learning,” Sensors, 2021 Sep 9; 21(18):6055. doi: 10.3390/s21186055.

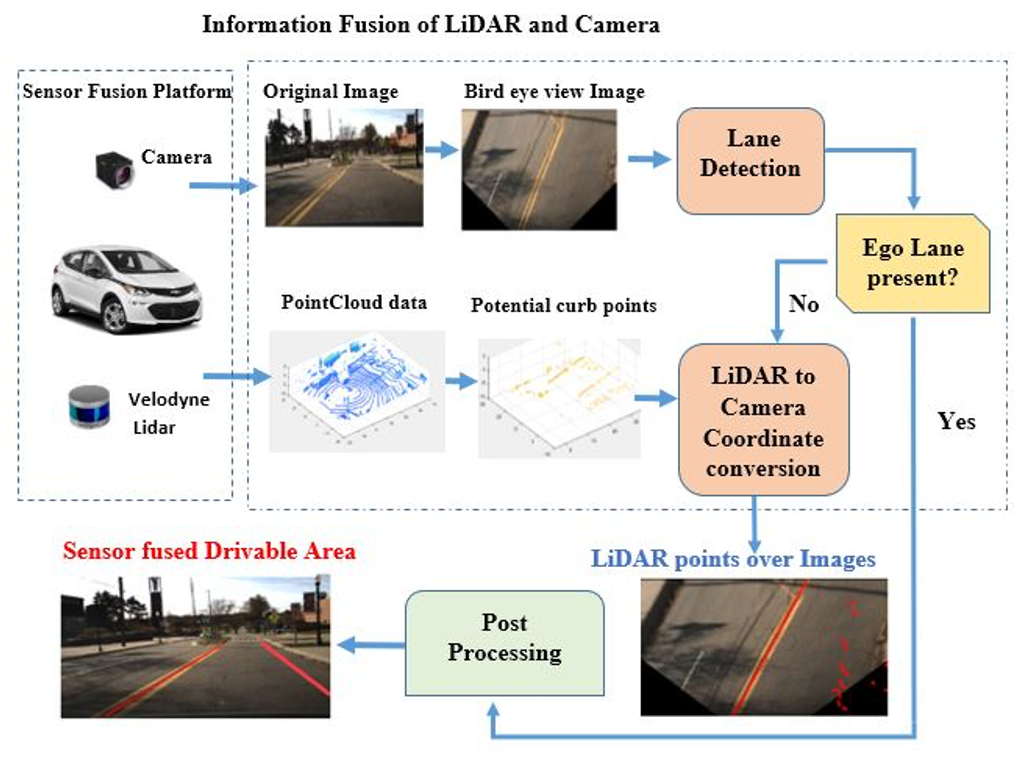

- Sriram Jayachandran Raguraman, Jungme Park, “Intelligent Drivable Area Detection System using Camera and Lidar Sensor for Autonomous Vehicle,” 20th Annual IEEE International Conference on Electro Information Technology, July 31-Aug 1st.

- Jungme Park, Sriram Jayachandran Raguraman, Aakif Aslam, and Shruti Gotadki, “Robust Sensor Fused Object Detection Using Convolutional Neural Networks for Autonomous Vehicles,” SAE Technical Paper 2020-01-0100, 2020, https://doi.org/10.4271/2020-01-0100.

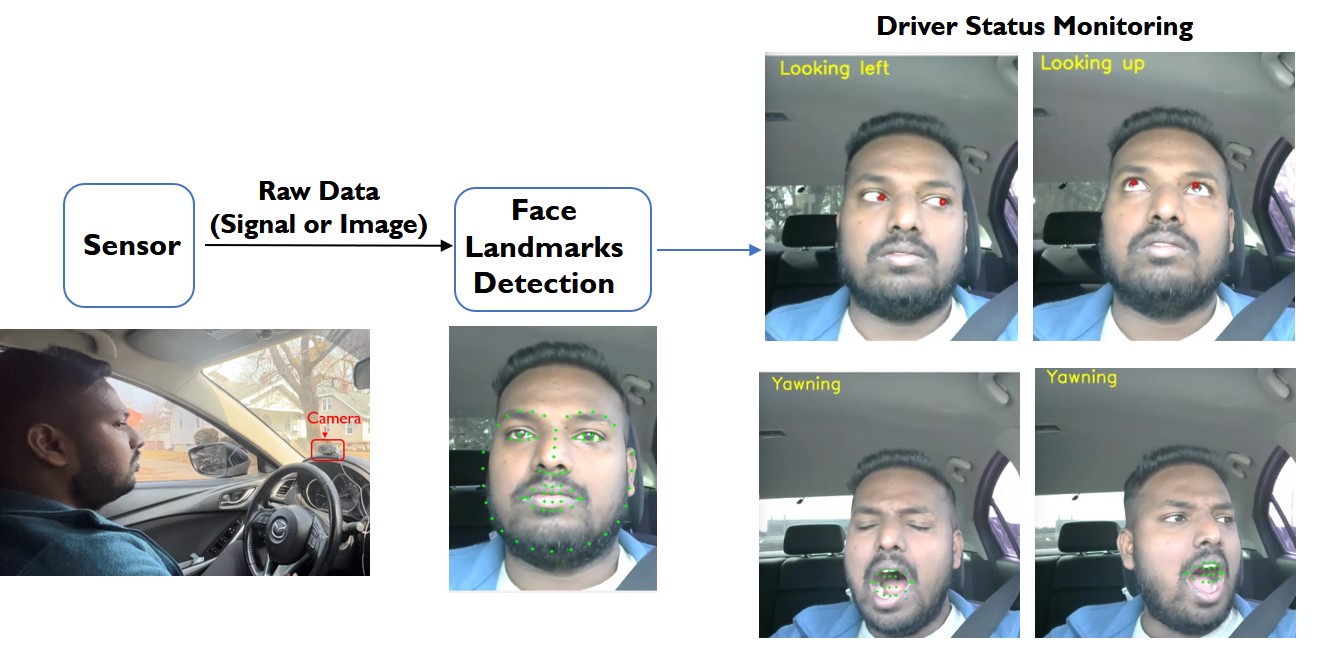

- Yogesh Jesudoss and Jungme Park, “A Robust Failure Proof Driver Drowsiness Detection System Estimating Blink and Yawn,” SAE Technical Paper 2020-01-1030, 2020, https://doi.org/10.4271/2020-01-1030.

- Viktor Ciroski and Jungme Park, “Lane Line Detection by LiDAR Intensity Value Interpolation,” SAE Int. J. Adv. & Curr. Prac. in Mobility 2(2):939-944, 2020, https://doi.org/10.4271/2019-01-2607.

- Ashwin Arunmozhi, Jungme Park, “On-road vehicle detection using a combination of Haar-like, HOG and LBP features and investigating their discrete detection performance,” 2018 IEEE International conference on Electro/Information technology, Oakland University, MI.

- Ashwin Arunmozhi, Shruti Gotadki, Jungme Park, “Stop sign and stop line detection and distance estimation for Autonomous driving control,” 2018 IEEE International conference on Electro/Information technology, Oakland University, MI

PATENTS

- US Patent, US11840974B2, "Intelligent Mass Air Flow (MAF) Prediction System with Neural Network," granted on December 12, 2023.

- German Patent, DE102015108270B4, "Prediction of Vehicle Speed Profile Using Neural Networks," granted on July 6, 2023.

- Chinese Patent, CN105270383B, "Speed Curve Prediction Using Neural Network," granted on October 11, 2019.

- US Patent, US9663111B2, "Vehicle Speed Profile Prediction Using Neural Networks," granted on May 30, 2017.

- US Patent Application, US20100305798A1, "System and Method for Vehicle Drive Cycle Determination and Use in Energy Management," published on December 2, 2010.

Project Objectives: This project has three thrusts

- In thrust #1, we focus on the study of distributed AI inference design. Our solution would jointly make use of the computing and communication abilities of modern Internet of Things (IoT) devices and edge server(s) to address the weak computing resource of IoT devices on running artificial intelligence (AI) applications, especially computer vision applications.

- In thrust #2, we focus on the study of the integration of distributed AI inference in vision applications and develop new methods that coordinate with edge computing.

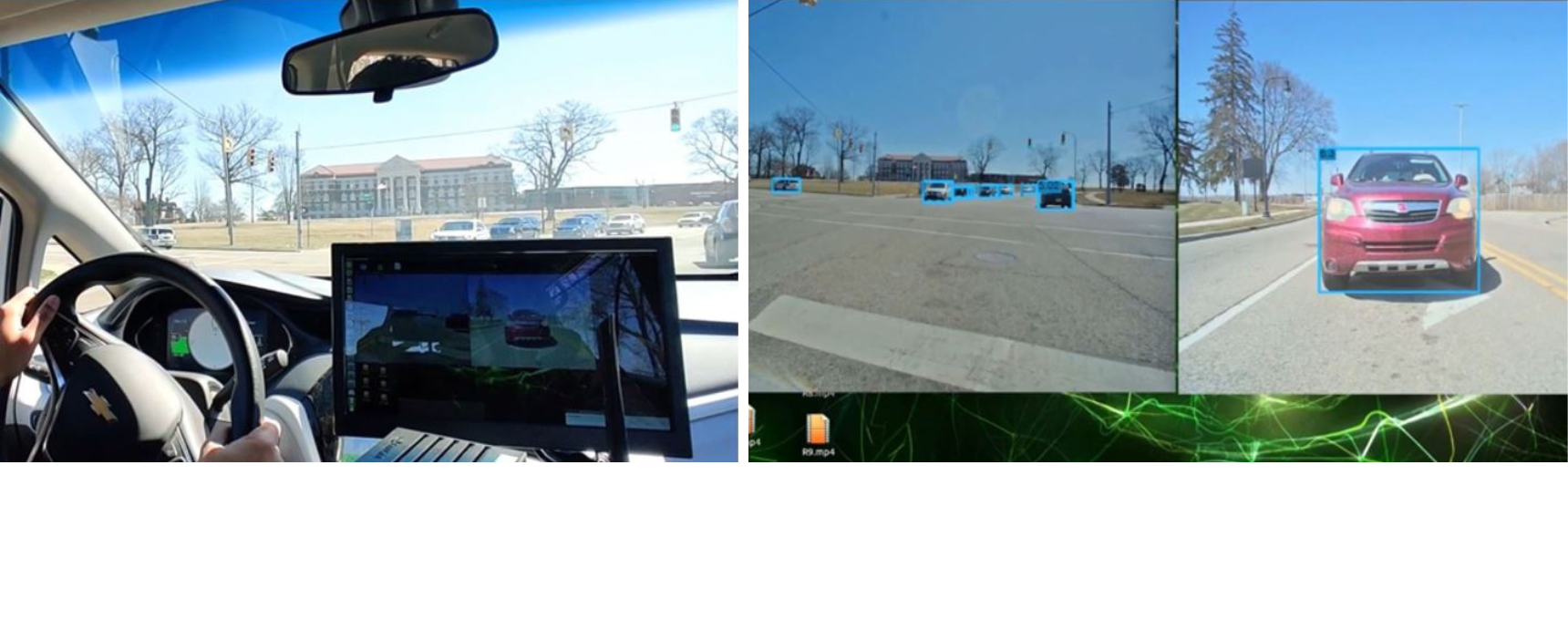

- In thrust #3, we focus on developing a vehicular edge computing platform to implement and validate the proposed research. Thrust 3 defines two main tasks: 1) An indoor testbed will be implemented to evaluate the developed algorithms extensively and prove their correctness. 2) An outdoor testbed will be built in the GM Mobility Research Center (GMMRC) at Kettering University (KU). The outdoor test bed will utilize one of the testing vehicles owned by KU, which is fully equipped with various sensors for autonomous driving research. To test and evaluate a cooperative AI inference system in real-world scenarios, V2X communication units will be installed at GMMRC for communication between an edge server and onboard vehicular computing units.

Year 3 Accomplishments:

Outdoor Testbed Implementation at GMMRC

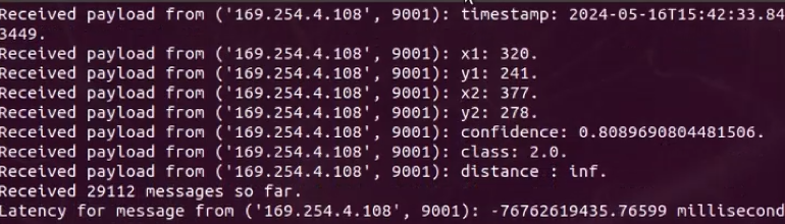

The outdoor testbed was implemented at the testing track, GMMRC, located at Kettering. To test and evaluate a cooperative AI inference system in real-world scenarios, Cellular V2X communication units have been installed on both testing vehicles and infrastructure to facilitate the exchange of critical traffic information, enhancing road safety.

- Infrastructure implementation for C-V2X: The roadside infrastructure at the intersection area in the GM Mobility Research Center (GMMRC) at Kettering University has been implemented. The infrastructure for C-V2X includes an edge computing unit, a roadside unit (RSU), and a stereo camera, all mounted on a pole.

- On-Board Units Setup for Testing Vehicles: The Cohda Wireless EVKs have been selected for the on-board units (OBUs), representing the latest in V2X OBU technology with C-V2X capabilities. The OBUs are integrated within vehicles, ensuring seamless communication with roadside units (RSUs) and neighboring vehicles, as shown in the figure. They are equipped with antennas and communication systems to receive and transmit data effectively, enabling various C-V2X applications. The OBUs are designed to harness C-V2X technology, providing a bandwidth of 20 MHz and operating in the 5.9 GHz ITS spectrum.

- Broadcasting Traffic Information by RSU: A camera continuously monitors the intersection area at GMMRC using a DNN-based object detection algorithm on the edge computing unit. The processed traffic information includes object locations, distances, and GPS coordinates. The edge computing device forwards the object detection results to the RSU for broadcasting. Subsequently, the RSU disseminates the traffic information to the OBUs installed in nearby vehicles at the intersection.
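The information flow from the edge computing unit to the RSU can be illustrated with a small sketch. This is only an illustration under stated assumptions: the field names, the `DetectedObject` type, and the JSON encoding are hypothetical and chosen for readability, and they are not the message format used by the deployed RSU. The coordinates in the example are placeholder values.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class DetectedObject:
    """One detection result (hypothetical schema, not the deployed format)."""
    label: str
    bbox_xyxy: List[int]   # object location in image pixels: x1, y1, x2, y2
    distance_m: float      # estimated distance from the roadside camera
    latitude: float        # GPS coordinates of the object
    longitude: float


def encode_traffic_message(objects: List[DetectedObject], timestamp_s: float) -> bytes:
    """Serialize detections into a compact payload the edge unit hands to the RSU."""
    payload = {"timestamp_s": timestamp_s, "objects": [asdict(o) for o in objects]}
    return json.dumps(payload, separators=(",", ":")).encode("utf-8")


def decode_traffic_message(data: bytes) -> List[DetectedObject]:
    """Rebuild the detections on the receiving side (for example, an OBU)."""
    payload = json.loads(data.decode("utf-8"))
    return [DetectedObject(**o) for o in payload["objects"]]


# Placeholder values for illustration only.
detections = [
    DetectedObject("pedestrian", [410, 220, 455, 330], 12.5, 43.0, -83.7),
    DetectedObject("car", [100, 240, 300, 360], 28.0, 43.0001, -83.7002),
]
message = encode_traffic_message(detections, timestamp_s=1700000000.0)
received = decode_traffic_message(message)
```

Keeping the payload to labels, locations, distances and coordinates means the broadcast stays small, since the camera frames themselves never leave the edge computing unit.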

Plan for Year 4:

The Kettering team will conduct experiments on the client-server model in outdoor environments. Using the current hardware setup for the client and server computers, the team will explore the optimal DNN computing workload split between the client and server. Once the optimal DNN workload split is determined, the intersection collision avoidance system, which integrates cooperative AI and C-V2X, will be tested in real-world scenarios at the GMMRC testing track.
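The workload-split question can be framed as a small search problem. The sketch below is one possible formulation, not the team's method: it assumes a sequential DNN, per-layer timings measured separately on the client and the server, and a fixed link rate, and it picks the cut that minimizes end-to-end latency. All names and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Layer:
    name: str
    client_ms: float   # hypothetical time to run this layer on the vehicle client
    server_ms: float   # hypothetical time to run this layer on the edge server
    output_kb: float   # size of the layer's output tensor


def best_split(layers: List[Layer], input_kb: float, link_kb_per_ms: float) -> Tuple[int, float]:
    """Return (k, latency_ms): the client runs layers[:k], the server runs layers[k:].

    Simplifying assumption: the tensor at the cut is always transmitted,
    including the final output when k == len(layers).
    """
    best_k, best_latency = 0, float("inf")
    for k in range(len(layers) + 1):
        client_ms = sum(layer.client_ms for layer in layers[:k])
        server_ms = sum(layer.server_ms for layer in layers[k:])
        sent_kb = input_kb if k == 0 else layers[k - 1].output_kb
        total_ms = client_ms + sent_kb / link_kb_per_ms + server_ms
        if total_ms < best_latency:
            best_k, best_latency = k, total_ms
    return best_k, best_latency


# Hypothetical profile of a four-layer network.
profile = [
    Layer("conv1", client_ms=4.0, server_ms=1.0, output_kb=800.0),
    Layer("conv2", client_ms=6.0, server_ms=1.5, output_kb=200.0),
    Layer("conv3", client_ms=8.0, server_ms=2.0, output_kb=50.0),
    Layer("head", client_ms=3.0, server_ms=0.5, output_kb=1.0),
]
split_index, latency_ms = best_split(profile, input_kb=600.0, link_kb_per_ms=50.0)
# split_index == 2: run conv1 and conv2 on the client, the rest on the server
```

With these made-up numbers the best cut falls after `conv2`, where the intermediate tensor is already much smaller than the raw input but the client has not yet spent time on the most expensive layers. A real study would replace the table with measured timings and the measured link rate.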

- This material is based upon work supported by the National Science Foundation under Grant Number #2128346.

- Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Tracking of stationary and moving objects is a critical function of autonomous driving technologies. Signals from several sensors, including camera, radar and LiDAR sensors, are combined to estimate the position, velocity, trajectory and class of objects such as other vehicles and pedestrians. The techniques used to combine information from different sensors are called sensor fusion.

The main objective of our project is to find and track moving objects, such as cars and trucks, in front of the ego vehicle based on information from camera and radar sensors. Using a Deep Neural Network (DNN), the system has been trained to extract features from the collected sensor data and to detect and classify objects. Camera data is mainly used to understand the environment, and it makes objects easy to find and classify, but a camera struggles to identify objects at night and in adverse weather. Therefore, radar is used alongside the camera: it works well in any weather conditions and also provides the velocity and range of each object. We trained and tested the system with several recent DNNs on the combined sensor data and then validated the results to compare which network is more efficient. To verify the methods, we used an automotive radar and carried out measurements in a lab and on real roads.
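To make the complementary roles of the two sensors concrete, here is a minimal sketch of one simple fusion scheme: geometric association, where each radar target is projected to an image column and attached to the camera bounding box that contains it. This is not necessarily the approach used in the project, which trains DNNs on combined sensor data. It assumes a pinhole camera whose optical axis is aligned with the radar boresight, and the intrinsics `fx` and `cx` as well as all type names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CameraDetection:
    label: str      # class predicted from the camera image
    x_min: float    # left edge of the bounding box, in pixels
    x_max: float    # right edge of the bounding box, in pixels


@dataclass
class RadarTarget:
    range_m: float
    azimuth_rad: float   # positive to the right of the boresight
    speed_mps: float     # radial speed reported by the radar


@dataclass
class FusedObject:
    label: str
    range_m: Optional[float]
    speed_mps: Optional[float]


def azimuth_to_column(azimuth_rad: float, fx: float, cx: float) -> float:
    """Project a radar azimuth onto an image column with a pinhole model."""
    return cx + fx * math.tan(azimuth_rad)


def fuse(detections: List[CameraDetection], targets: List[RadarTarget],
         fx: float, cx: float) -> List[FusedObject]:
    """Attach the nearest radar target inside each box to the camera class."""
    fused = []
    for det in detections:
        inside = [t for t in targets
                  if det.x_min <= azimuth_to_column(t.azimuth_rad, fx, cx) <= det.x_max]
        if inside:
            nearest = min(inside, key=lambda t: t.range_m)
            fused.append(FusedObject(det.label, nearest.range_m, nearest.speed_mps))
        else:
            # Camera-only detection: class is known, range and speed are not.
            fused.append(FusedObject(det.label, None, None))
    return fused


detections = [CameraDetection("car", 700.0, 800.0),
              CameraDetection("truck", 400.0, 500.0),
              CameraDetection("pedestrian", 1000.0, 1050.0)]
targets = [RadarTarget(25.0, 0.10, -3.0),
           RadarTarget(40.0, -0.20, 1.5),
           RadarTarget(60.0, 0.11, 0.0)]
fused = fuse(detections, targets, fx=1000.0, cx=640.0)
```

In this example the car box contains two radar returns and keeps the closer one, while the pedestrian box has no radar match and carries only the camera class.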

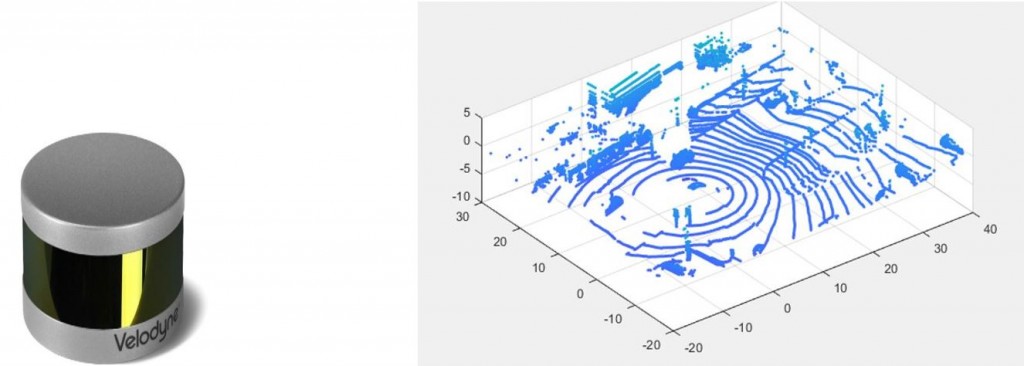

The primary objective of object detection is to track obstacles in real-life situations. A camera can be used for this purpose, but in poor lighting or extreme weather the data obtained from the camera cannot be relied upon. For this reason, we fuse sensors with different capabilities for robust object detection. The following is a screenshot of Velodyne LiDAR data.

LiDAR stands for Light Detection and Ranging. A LiDAR rapidly fires laser beams at a surface, and a sensor inside the unit measures the time it takes for each beam to bounce back. Because the beam travels at the speed of light, that round-trip time gives the distance to the surface. LiDAR provides the desired robustness and precision under harsh conditions.
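The time-of-flight measurement described above converts to a distance with one line of arithmetic: the beam covers the distance twice, so the range is half the round-trip time multiplied by the speed of light. The function name below is illustrative only.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact by definition of the metre


def range_from_time_of_flight(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the surface and back, hence the division by two.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


# A pulse that returns after 200 nanoseconds hit a surface about 29.98 m away.
distance_m = range_from_time_of_flight(200e-9)
```

The short time scales involved (a 30 m target corresponds to about 200 ns) are why LiDAR ranging depends on precise timing electronics.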

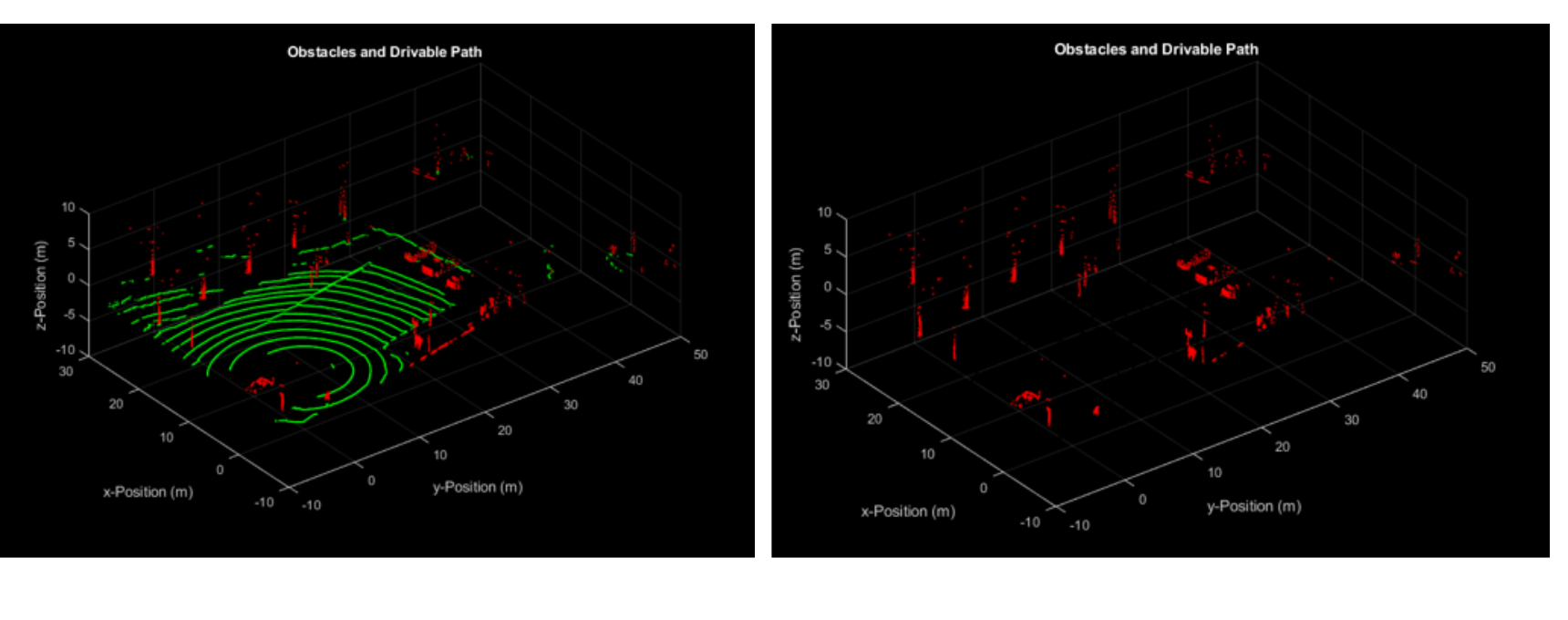

Drivable Area Detection System Using Camera and LiDAR

There are many roads without lane markings or stop lines. The goal of this project is to detect road boundaries to ensure that cars can travel safely in unstructured road environments. This work focuses on developing a framework that integrates Camera and LiDAR technologies, which can be implemented as a safety application for self-driving cars.

The objective of the study is to detect the awareness state of the driver. Methods for detecting the driver's awareness state fall into three major categories: vehicle performance, physiological feature detection and facial feature recognition. The vehicle performance approach detects vehicle behaviour such as lane drifting, inappropriate steering, and accelerator and braking output. This approach does not account for differences in driving performance between drivers: driving behaviour changes from person to person, which adds complexity to detecting the awareness state from these inputs. The second approach measures physiological features, for example by taking an electrocardiogram (ECG) or electroencephalogram (EEG). These procedures are effective but intrusive, making them impractical and extremely inconvenient for real-time applications. The third approach, image processing, is non-intrusive and more accurate than the vehicle driving performance detector.

In this method, the awareness state of the driver is monitored by processing images captured in real time. Deep learning is used to detect the awareness state of the driver, with the neural network trained on the ground truths available in the training sets. Deep learning network architectures such as VGG16, FaceNet and ResNet are pre-trained on large datasets such as Labeled Faces in the Wild (LFW), the CASIA-WebFace dataset and the Caltech dataset. The study detects driver drowsiness in stages: face detection in the image, face recognition and drowsiness detection. In addition, future study will focus on detecting the driver's face and processing the image at night (IR cameras and processing methods are to be considered).

Intelligent Mass Air Flow (MAF) Detection System

Mass air flow determination is needed for better performance and emissions control in internal combustion engines. Current methods for determining MAF rely on measurements from a dedicated mass air flow (MAF) sensor. However, the MAF sensor drifts over time and gives inaccurate results, so it needs to be calibrated. The figure below shows the location of the MAF sensor in the engine intake system.

The main aim of this project is to build an Intelligent Mass Air Flow detection system using Artificial Neural Networks (ANN) through which the MAF can be accurately predicted. Depending on the performance of the proposed system, calibration can eventually be performed on-line. Depending on the results of in-vehicle testing, the MAF sensor could eventually be replaced by this system.

Intelligent Mis-Fire Detection System

With ever more stringent environmental protection norms, engine misfire detection must run in the vehicle in real time to keep emissions under control. Artificial intelligence is a promising approach to real-time detection, but the challenge lies in running neural networks with the processing power available in the engine. In this project we work to keep mis-detections under the specified threshold to ensure low engine emissions.

Intelligent Fatigue Cycle Prediction System

Currently, fatigue life prediction methods for welded joints are primarily based on structural stresses. However, predicting fatigue can be complex due to the numerous factors involved in the welding process (e.g., current, voltage, wire feed speed, gas flow rate, material properties, etc.) and the stochastic nature of material failure under cyclic loading. Machine learning offers powerful tools for modeling and predicting fatigue in welded structures despite these complexities.

Developing Artificial Intelligence (AI) applications requires many procedures, from data processing, feature extraction, machine-learning training and system verification to model deployment to vehicles on the road. Each procedure involves processing large volumes of data and requires massive computing operations. A deep learning workflow contains a procedure to train a model and a separate procedure to deploy the trained model for real-time service. When trained AI models are deployed for automotive applications, they require low latency because response time and reliability are critical. We study various embedded devices for deploying autonomous driving (AD) applications in real time.
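Since low latency is the deciding requirement at deployment time, a simple timing harness is often the first tool used when comparing embedded devices. The sketch below is a generic illustration rather than the lab's benchmarking procedure: `dummy_model` stands in for a real inference call, and the 33 ms budget (roughly 30 frames per second) is a hypothetical target.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency_ms(infer: Callable[[], object], warmup: int = 5,
                       runs: int = 50) -> Dict[str, float]:
    """Time repeated calls to `infer` and summarize the latency distribution."""
    for _ in range(warmup):
        infer()  # warm-up runs are discarded (caches, lazy initialization)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "median_ms": statistics.median(samples),
        # With n=20 the last cut point is the 95th percentile.
        "p95_ms": statistics.quantiles(samples, n=20)[-1],
        "max_ms": max(samples),
    }


def dummy_model() -> int:
    """Stand-in workload; replace with the deployed model's inference call."""
    return sum(i * i for i in range(10_000))


stats = measure_latency_ms(dummy_model)
LATENCY_BUDGET_MS = 33.0  # hypothetical budget, about 30 frames per second
meets_budget = stats["p95_ms"] <= LATENCY_BUDGET_MS
```

Reporting a high percentile alongside the median matters for safety-related applications, because occasional slow frames affect response time even when the typical frame is fast.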